20. October 2017

Optimize CI build on AppVeyor with a multi-stage image

Part of the appeal of Docker in a CI/CD pipeline is the idea that you have identical dev, test, QA and prod images, or at least as close to identical as possible. However, there are drawbacks to that approach, as building software often requires more libraries and tools (often called the “toolchain”) than running it. It doesn’t make sense to have those in your production image because of size and maintenance needs. If you only have exactly what you need, you only have to patch and maintain what you need, and you only need storage for that. Consider a “traditional” Windows Server: if a security problem comes up in the desktop subsystem, you probably want to patch the server, although you might never log in and only administer it through PowerShell¹. As Windows Containers are built on Windows Server Core (or Nano Server), we are already in quite good shape, but we will probably still add our toolchain for compilation, which we don’t actually need in production. How can we solve that?

Multi-stage images in general

In releases of Docker prior to 17.05, the common solution for this was to have two different images, one for building your solution and one for running it. That worked, but it required you to create and maintain two images, which also wasn’t really what you wanted to do. To address that, Docker introduced multi-stage builds. They allow you to use multiple images in one Dockerfile, each usage being called a “stage”. Using those, you can have one container based on Windows Server Core including all the libraries you need for building your solution and then “re-base” onto an empty Windows Server Core where you just put in your compiled application and the minimal dependencies for production. If you want to learn more about it, check out this article in the official Docker documentation, which is based on examples in a blog post by Alex Ellis, another recommended follow on Twitter². If you know NAV, you might wonder why that is relevant there: dev and production use the exact same architecture and, putting .NET integration aside, nothing is special in dev.

Just as an explanation for non-NAV people (skip this if you already work with NAV): Traditionally in NAV, the code was, and for probably 99.9% of all installations still is, part of the database, and for a very long time the end-user client was the same as the development client. Therefore the toolchain was also part of an end-user installation and the production environment. Everything you needed to run, you also needed to compile. There were and are a number of corner cases, but in the end that didn’t make a dramatic difference.
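To make the general multi-stage idea described above a bit more concrete, here is a minimal sketch of a two-stage Dockerfile for a Windows application. It is not taken from any real project: the image names and tags are assumptions, and `MyApp.sln` and `MyApp.exe` are hypothetical placeholders for your own solution.

```dockerfile
# escape=`

# Stage 1 ("build"): an image that contains the full toolchain.
# The image name and tag are assumptions; use whatever matches your host.
FROM mcr.microsoft.com/dotnet/framework/sdk:4.8 AS build
WORKDIR C:\src
# Copy the sources into the build stage (MyApp.sln is a hypothetical solution).
COPY . .
# Compile and put the output into a well-known folder.
RUN msbuild MyApp.sln /p:Configuration=Release /p:OutDir=C:\out\

# Stage 2: a smaller runtime image without the toolchain.
FROM mcr.microsoft.com/dotnet/framework/runtime:4.8
WORKDIR C:\app
# Only the compiled output is taken over from the first stage.
COPY --from=build C:\out\ .
# MyApp.exe is a hypothetical build output.
ENTRYPOINT ["C:\\app\\MyApp.exe"]
```

Only the last stage ends up in the resulting image, so everything installed in the `build` stage is left behind. The `escape` directive in the first line switches the escape character to a backtick so that Windows paths with backslashes can be written as they are.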

My multi-stage image for NAV

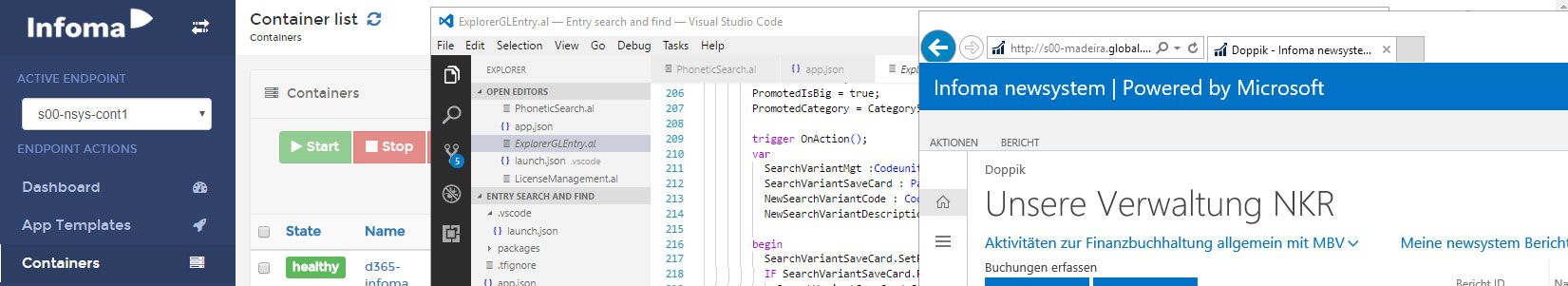

But with the new NAV dev environment, aka Visual Studio Code, code is no longer in the database but in files. Even more importantly, you don’t need a database and a middle-tier server anymore to compile. With that in mind, I decided to optimize my CI sample with AppVeyor: it originally created a full NAV installation, probably also including a SQL Server, just to call the compiler, because in the bad old days you absolutely needed that. Now we only need the new compiler (alc.exe), the symbols (something like the interface to all NAV objects) and our project. To achieve that, I created a Dockerfile and a set of scripts, which you can again find here. The first stage is a full-blown NAV install including a SQL Server. That container is used to transfer all the (few) necessary bits into the next stage. Then we run that second, much smaller container (the new NAV toolchain is less than 100 MB) and compile our source code in it. What does that look like?

The Dockerfile has two parts: in the first one a regular NAV container is started. I just override Mainloop.ps1 to avoid running it endlessly and to allow me to copy all necessary components into a folder. The second part is based on Windows Server Core again and just takes those components, but everything else from NAV is gone. In the end we have a Windows Server Core with the minimal NAV part that allows us to compile. That also makes the AppVeyor build.ps1 file a lot simpler, as it no longer needs to take care of running environments and getting the necessary components out, but just runs the container and calls a build script inside it. With that change the AppVeyor build also only needs to download a very small layer of NAV on top of Windows Server Core, which makes the build time go down from 10 to 5 minutes. That is still quite a lot, I would guess because the disks are quite slow. If I do the same on my machines, it takes less than a minute to pull, run and compile, and if I have already pulled the image, run and compile take about 20 seconds.
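The two parts described above could be sketched roughly like this. This is not my actual Dockerfile, just an illustration of the structure: the image names and tags, the `C:\run` paths, the `C:\buildbits` folder and the `build.ps1` script are all assumptions or hypothetical names, and the NAV image may need additional settings to start during a build.

```dockerfile
# escape=`

# Part 1: the full NAV image (image name and tag are assumptions).
FROM microsoft/dynamics-nav:devpreview AS full
SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop';"]
# Hypothetical override: instead of looping endlessly, this script copies
# alc.exe and the symbols into C:\buildbits and then returns.
# The target path is an assumption about where the image looks for overrides.
COPY MainLoop.ps1 C:\run\my\MainLoop.ps1
# Assumed start script of the NAV image; it picks up the override above.
RUN C:\run\start.ps1

# Part 2: plain Windows Server Core with only the compiler bits.
FROM mcr.microsoft.com/windows/servercore:ltsc2016
# Take over only the collected components; everything else from NAV is gone.
COPY --from=full C:\buildbits C:\buildbits
# Hypothetical script that calls alc.exe on a project folder mounted at runtime.
COPY build.ps1 C:\buildbits\build.ps1
CMD ["powershell", "-File", "C:\\buildbits\\build.ps1"]
```

The important point is the `COPY --from=full` line: it is the only connection between the two parts, so the final image contains nothing from the NAV installation except what was deliberately collected.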

If you want to give that a try, you can either create your own image with my Dockerfile or get in touch, and I’d be happy to provide you with access to my private repo where a “build image” based on the current devpreview is available. My sample relies on AppVeyor, but the image itself should be fully platform agnostic, and you could use it on any platform and with any (Extension v2) project. While this kind of turns the original multi-stage build idea around by making a big production image into a small build image, it still shows well how multi-stage builds help in tailoring your images exactly to your needs.

¹ Therefore Microsoft released Windows Server Core and Nano Server.

² And while you’re at it, go and marvel at OpenFaaS, an amazing project also created by Alex Ellis.